From new processor technologies to quantum computing, 2016 promises to be another exciting year for supercomputing. Here are five predictions as to how the industry will push ahead in 2016:

The year of progress in new processor technologies

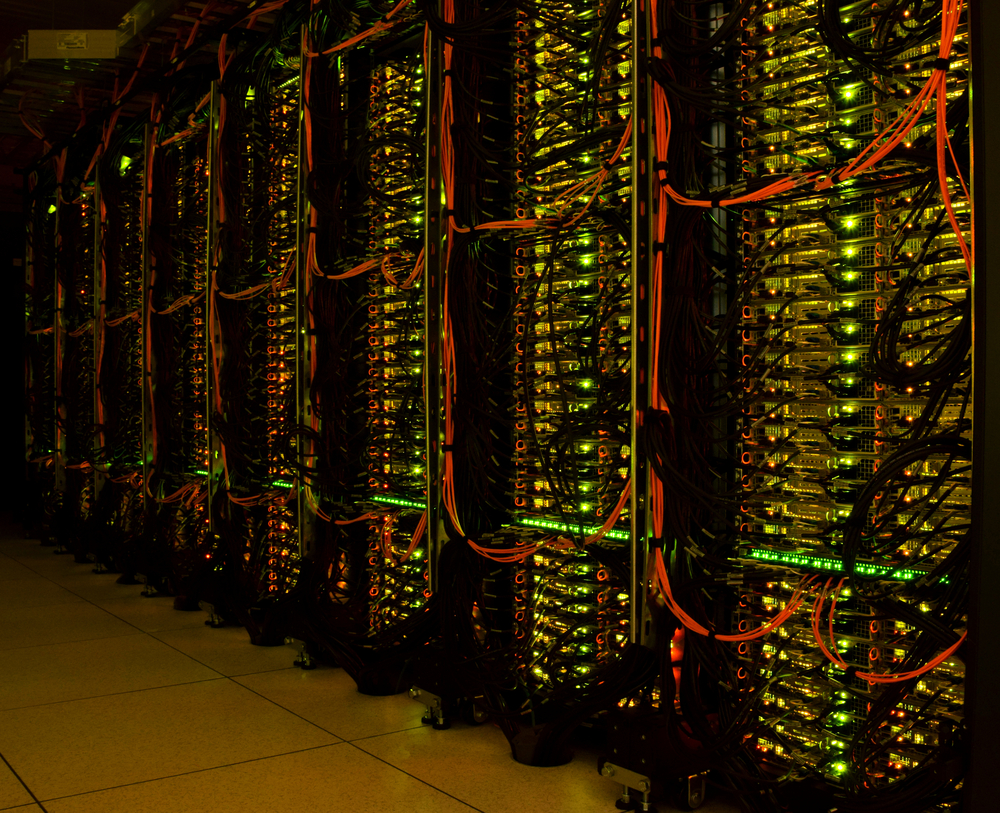

There are a number of exciting technologies we should see in 2016, and a leader among them will be Intel's next-generation Xeon Phi processor, a hybrid between an accelerator and a general-purpose processor. This new class of processor will have a large impact on the industry because its design combines a many-core architecture with the productivity of a general-purpose CPU. Cray, for example, will be delivering Intel Xeon Phi processors in some of our largest systems, including those going to Los Alamos National Laboratory (the "Trinity" supercomputer) and NERSC (the "Cori" supercomputer).

2016 should also bring advances from NVIDIA with its cutting-edge Pascal GPUs, while continuing innovation in ARM-based processors will keep the processor market competitive.

A quantum leap

Quantum computing will, fittingly, both be and not be an exciting technology in 2016. Now that Google has confirmed that its D-Wave system is performing quantum annealing, we expect more headlines to follow, but by the end of the year the industry will still be struggling to understand exactly what it is and what it will be most useful for. This is certainly a new and exciting technology, and the excitement it generates is good for the industry, but it won't be a truly productive technology in 2016, or 2020, or perhaps even 2025.

2016 – the year for data-tiering?

Over the past few years we've seen an explosion in the usefulness of solid-state devices and associated technologies, along with dramatic improvements in price-performance. This shift is raising concerns about how to manage data as it moves through multiple, complex layers: from disk to solid-state storage to non-volatile memory (NVM) to random-access memory. In spite of these complexities, we will see a lot of cool new technologies, solutions and systems come to market in 2016.

This technology has already created one very positive trend in cost-effectiveness: if you want bandwidth, you buy bandwidth; if you want capacity, you buy capacity. The difficult part is managing data movement between these layers efficiently, so a lot of software development still has to be done to take advantage of these cost savings. DDN's Infinite Memory Engine, EMC's (oops, I mean Dell's) DSSD-based product and Cray's own DataWarp are a few examples of efforts to combine software and hardware innovation around data-tiering, and 2016 will see some blazing-fast solutions and advances in this technology.

Coherence of analytics and supercomputing

Coherence and convergence. It seems like everyone is talking about them, including the U.S. Government in its National Strategic Computing Initiative, the European Human Brain Project, and every major commercial and academic player in high performance computing. But it's not all just talk. Big data is changing how people use supercomputing, and supercomputing is changing how people handle big data. Whether it's using supercomputers for baseball analytics or using IoT sensor data to model weather and climate, we now see that analytics and supercomputing are inextricably and permanently intertwined. In 2016 we will continue to see this coherence grow and bear productive fruit.

We will finally abandon Linpack as the metric for the Top500… just kidding

Since 1993, the Top500 has been the metric of choice for the press and the public to understand how supercomputing is changing and evolving. That said, it has never been a good measure of whether supercomputers actually matter in our lives. It simply doesn't measure whether a supercomputer is productive.

The Top500 does allow us to see, retrospectively, the evolution of technologies being delivered in the government and commercial spaces. Unfortunately, it doesn't tell us much about where technology is going or whether these systems are useful and productive. Still, the Top500 has one key benefit that is very important and should not be minimized: it is easy to understand. As much as I don't believe in the Top500, I must say that I admire its simplicity and its ability to focus discussion on the big computing challenges we face. So my prediction is that in 2016 the Top500 will still be the occasion for proud chest-thumping by governments, universities and commercial companies. All I can say is, Happy New Year, Top500! Maybe we will have a better metric someday, but for now, you are the best we have.