The Canonical Data Platform team has released Charmed Kubeflow 1.4 – the state-of-the-art MLOps platform. The new release enables data science teams to securely collaborate on AI/ML innovation on any cloud, from concept to production.

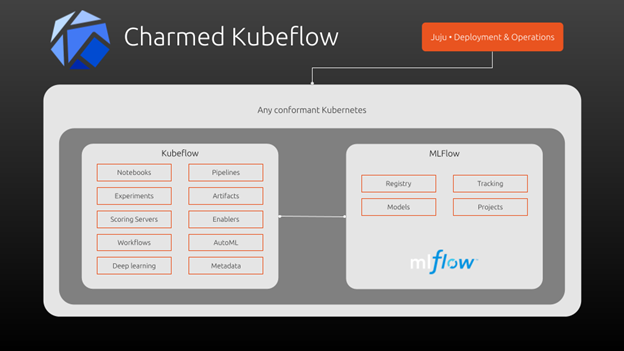

Charmed Kubeflow is free to use: the solution can be deployed in any environment without constraints, paywalls or restricted features. Data labs and MLOps teams only need to train their data scientists and engineers once to work consistently and efficiently on any cloud or on-premises. Charmed Kubeflow offers a centralised, browser-based MLOps platform that runs on any conformant Kubernetes – enhancing productivity, improving governance and reducing the risks associated with shadow IT.

The latest release adds several features for advanced model lifecycle management, including upstream Kubeflow 1.4 and support for MLflow integration.

Data scientists can get started today with Charmed Kubeflow 1.4 using Juju, the unified operator framework for hyper-automated management of applications running on both virtual machines and Kubernetes. The new release is in the CharmHub stable channel now, and can be deployed to any conformant Kubernetes cluster using a single Juju command.

Best-in-class MLOps, for free

Data science can be complex and demanding, but deploying and configuring the Charmed Kubeflow 1.4 MLOps platform as the data lab’s primary AI/ML infrastructure is easy.

Engineers and data scientists can rapidly set up an evaluation environment today – with or without GPU acceleration – using just a single system running MicroK8s. Evaluators can follow the getting started guide and start improving their AI automation in 30 minutes or less.

Charmed Kubeflow is truly free, open source software, released under the Apache License 2.0, with 24/7 support or fully managed service options available from Canonical.

Better model lifecycle management – now with Kubeflow 1.4 and MLflow integration

Kubeflow 1.4 comes with major usability improvements over previous releases, including a unified training operator. The new training operator supports the popular AI/ML frameworks TensorFlow, MXNet, XGBoost and PyTorch. Unifying the training operator greatly simplifies the solution, improves future extensibility and consumes fewer resources on the Kubernetes cluster.

Support for MLflow integration has been added to the Charmed Kubeflow solution, enabling truly automated model lifecycle management using MLflow metrics and the MLflow model registry.

MLflow is an open source platform for AI/ML model lifecycle management, and includes features for experimentation, reproducibility, and deployment. MLflow also offers a centralised model registry.

Data scientists and data engineers can use the MLflow integration capability to build automatic model drift detection and trigger a Kubeflow model retraining pipeline. Model drift occurs when a model's accuracy declines over time because the live prediction data has diverged from the data the model was trained on.
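As a rough illustration of the idea, the Python sketch below tracks rolling accuracy over labelled live predictions and calls a retraining hook once accuracy falls too far below the accuracy measured at training time. It is a minimal sketch under stated assumptions, not the Charmed Kubeflow or MLflow implementation: the `AccuracyDriftMonitor` class, its parameters and the `trigger_retraining_pipeline` function are hypothetical names introduced for this example. In a real deployment, the baseline would typically be read from recorded training metrics and the hook would start a Kubeflow pipeline run.

```python
from collections import deque
from typing import Callable, Deque


class AccuracyDriftMonitor:
    """Hypothetical helper: flags drift when rolling accuracy drops below a floor."""

    def __init__(
        self,
        baseline_accuracy: float,
        tolerance: float,
        window_size: int,
        on_drift: Callable[[float], None],
    ) -> None:
        # Accuracy measured at training time (assumed to come from logged metrics).
        self.baseline_accuracy = baseline_accuracy
        # How far below the baseline accuracy may fall before drift is reported.
        self.tolerance = tolerance
        # Callback invoked with the rolling accuracy when drift is detected.
        self.on_drift = on_drift
        # Fixed-size window of recent outcomes (True = correct prediction).
        self._outcomes: Deque[bool] = deque(maxlen=window_size)

    def record(self, predicted: object, actual: object) -> bool:
        """Record one labelled prediction; return True if drift was reported."""
        self._outcomes.append(predicted == actual)

        # Wait until the window is full so the estimate is not too noisy.
        if len(self._outcomes) < self._outcomes.maxlen:
            return False

        rolling_accuracy = sum(self._outcomes) / len(self._outcomes)
        if rolling_accuracy < self.baseline_accuracy - self.tolerance:
            self.on_drift(rolling_accuracy)
            # Start a fresh window so one drift event triggers one retraining.
            self._outcomes.clear()
            return True
        return False


def trigger_retraining_pipeline(rolling_accuracy: float) -> None:
    # Placeholder only: a real hook would submit a retraining pipeline run.
    print(f"Drift detected (rolling accuracy {rolling_accuracy:.2f}); retraining requested")
```

A monitor created with `AccuracyDriftMonitor(0.9, 0.1, 500, trigger_retraining_pipeline)` would request retraining once accuracy over the last 500 labelled predictions falls below 0.8. Accuracy-based detection needs ground-truth labels for live predictions; where labels arrive late, comparing input feature distributions is a common alternative.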

Enabling MLflow on a Kubernetes cluster and integrating it with a Charmed Kubeflow deployment using the Juju unified operator framework is straightforward, and the MLflow Juju operator is available in CharmHub for immediate deployment.

Better governance: improved multi-user support

Charmed Kubeflow 1.4 fully supports multi-user deployment scenarios out of the box for all Kubeflow components, including Kubeflow notebooks, pipelines, and experiments. This update makes it simpler to use Charmed Kubeflow to improve governance and reduce the occurrence of shadow IT environments, whilst helping to combat organisational data leakage.

The authentication provider integration guide provides more information on setting up multi-user access controls for the Charmed Kubeflow 1.4 MLOps platform.